-------

Evening all, I thought I'd take a few minutes to outline five key things we found out about the new History GCSEs. OK, it's really four and a question, but hey ho!

1) Pupils this year did pretty much the same as last year overall

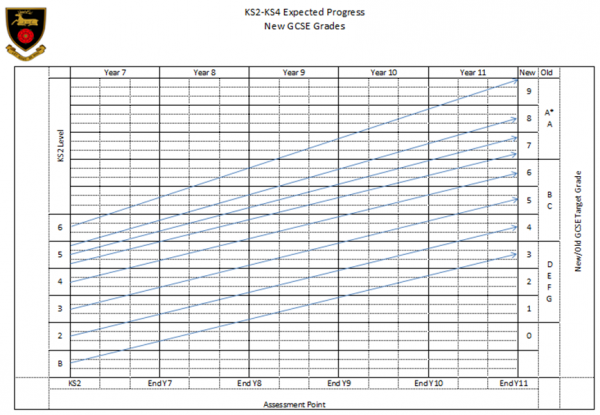

No changes here, and no surprise given the details below. I have updated the chart to show the 2019 results side by side.

This is not really a surprise as Ofqual demanded a statistical tie between 2017 and 2018. Therefore almost the same proportion of kids got a G/1 or greater as last year, and the same for C/4 and A/7.

Of course, this does not mean everyone’s results will have been stable. It is common with new specifications for some schools to do much better than normal and some to do much worse. This is usually because some schools manage to match what examiners were looking for more closely. It is almost impossible to second-guess this precisely in the first year of a specification, as examiners refine their expectations during first marking and grading discussions.

Takeaway lesson:

NO CHANGES HERE

Read the examiners’ reports closely and get some papers back if you were not happy with your overall results.

2) Your choice of board made almost no difference to overall grades (on average)

There is very little change here this year. The distribution of awards per board seems to be fairly static, which reflects the fact that awards are still tied to pupil prior attainment. From this we can infer that centres doing OCR A tend to have cohorts with higher prior attainment, and that a greater proportion of higher grades can therefore be awarded.

Discounting the statement at the end of the last point: because the boards all had to adhere to this basic rule when awarding grades, the differences between boards are also almost non-existent. If you look at the detail you will see that some boards did deviate from the 2017 figures, but this is because they have to take prior attainment into account. So the fact that OCR A seems to have awarded more 4+ and 7+ grades suggests that more high-attaining pupils took these exams. By contrast, OCR B probably awarded slightly fewer 4+ and 7+ grades due to a weaker cohort. This might imply that OCR centres chose their specification based on the ability range of their pupils (though this is pure speculation). AQA and Edexcel pretty much fit the Ofqual model, suggesting they had a broadly representative sample of pupils.
